First we will add some animated stand-in geometry: a swinging door that matches the footage, so that we can catch reflections on its surface to use later in comp. Create another mip_matteshadow with a generic Lambert holder shader (you only need it for its shading group, SG) that catches reflection, AO, and shadow, and apply it only to the door stand-in geo.

Render out the file in the fewest possible render layers, with all the render passes you need. Extra render layers take more render time, while passes do not. As often as possible, try to use only two render layers, fg and bg, and then many passes: shadows, indirect, reflection, and 2D motion vectors.

Should we render with 3D or 2D motion blur?

The 3D blur is a true rendering (much slower) of the object as it moves along the time axis. The 2D blur simulates this effect by taking a still image and streaking it along the 2D on-screen motion vector.
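To make the 2D idea concrete, here is a minimal sketch of streaking a still image along per-pixel motion vectors. It is only an illustration, not how Nuke or mental ray implement it: the function name `vector_blur`, the grayscale list-of-rows image, and the nearest-neighbor sampling are all assumptions chosen to keep the example short.

```python
import math

def vector_blur(image, vectors, samples=8):
    """Streak a grayscale image along per-pixel 2D motion vectors.

    image   -- list of rows of floats (hypothetical layout for this sketch)
    vectors -- list of rows of (vx, vy) pixel offsets, same size as image
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            vx, vy = vectors[y][x]
            total = 0.0
            for i in range(samples):
                # t runs from -0.5 to +0.5 so the streak is centered on the pixel
                t = (i + 0.5) / samples - 0.5
                # nearest-neighbor lookup, clamped to the image edges
                sx = min(max(math.floor(x + vx * t + 0.5), 0), width - 1)
                sy = min(max(math.floor(y + vy * t + 0.5), 0), height - 1)
                total += image[sy][sx]
            out[y][x] = total / samples
    return out
```

With a zero vector every sample lands on the same pixel, so the image comes back unchanged; the longer the vector, the further each pixel is smeared.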

There are three principally different methods:

- Raytraced 3d motion blur

This is the most common method, but the slowest to render. For film work with a render farm, this is usually the choice.

- Fast rasterizer (aka. "rapid scanline") 3d motion blur

Becoming less common, especially now with Unified Sampling render solutions.

- Post processing 2d motion blur

We will try this method because it renders fastest, and in some shots it will not make a visible difference.

There are four different ways to get your motion vectors out for use in a 2D package.

1) Create Render Pass -> 2D motion vector, 3D motion vector, normalized 2D motion vector

As associated passes they go by other names -> mv2DToxik, mv3D, mv2DNormRemap

This is my preferred and more recent method, because it works and is easy.

2) ReelSmart Motion Blur - RSMB, mental ray shader to output 2D motion vectors

Before Maya 2009 introduced native 2D motion vectors, this was common at work.

You need the free plugin for Maya, and a paid plugin for whichever compositing package you use.

3) mip_motion_vector shader, the purpose of which is to export motion in pixel space (mental ray's standard motion vector format is in world space) encoded as a color, so that the blur can be done in comp.

Most third-party tools expect the motion vector encoded as colors, where red is the X axis and green is the Y axis. In some cases (not in this mental ray setup), blue is left to carry the magnitude of the blur.

4) mip_motionblur shader, for performing 2.5D motion blur as a post process.
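The color encoding mentioned for mip_motion_vector can be sketched like this. This is an assumed, simplified convention rather than the exact mental ray behavior: motion is scaled by a hypothetical `max_displace` value and remapped so that 0.5 means "no motion", with blue optionally holding the magnitude. Check what your comp tool actually expects before relying on these numbers.

```python
import math

def encode_motion(dx, dy, max_displace=64.0):
    """Pack a pixel-space motion vector into an (r, g, b) color.

    Assumption for this sketch: -max_displace..+max_displace maps to 0..1,
    so 0.5 means no motion. Blue holds the normalized magnitude.
    """
    def remap(v):
        v = max(-max_displace, min(max_displace, v))  # clamp to the encodable range
        return 0.5 + 0.5 * v / max_displace

    magnitude = min(math.hypot(dx, dy) / max_displace, 1.0)
    return (remap(dx), remap(dy), magnitude)

def decode_motion(r, g, max_displace=64.0):
    """Recover the pixel-space vector from the red and green channels."""
    return ((r - 0.5) * 2.0 * max_displace, (g - 0.5) * 2.0 * max_displace)
```

The important part is that the renderer and the comp agree on the same scale factor; if they do not, the blur will be too long or too short even though the vectors look plausible.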

Good description of the ReelSmart motion blur shader compared to Mental Ray 2D vectors.

Example case of keeping your bty and your 2D motion vector in sync, then smearing in Nuke

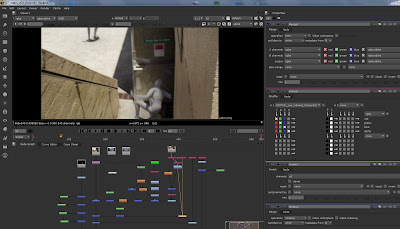

Nuke compositing the separate render passes.

Now we combine our original plate (not the one rendered from Maya) with its related shadow, reflection, and indirect passes. Then we can merge the animated character on top.
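As a rough per-pixel picture of that combine, here is a small sketch. The math is an assumption for illustration, not the exact node setup used here: the shadow pass is treated as a 0..1 darkening mask, reflection and indirect are added on top, and the character is a premultiplied foreground merged with a standard "over". The function names `apply_passes` and `over` are made up for this example.

```python
def apply_passes(plate, shadow, refl, indirect):
    """Combine one plate pixel with its passes.

    plate, refl, indirect -- (r, g, b) tuples
    shadow                -- 0..1, where 1 means fully in shadow (assumed convention)
    """
    return tuple(p * (1.0 - shadow) + r + i
                 for p, r, i in zip(plate, refl, indirect))

def over(fg_rgb, fg_alpha, bg_rgb):
    """Premultiplied 'over' merge: fg + bg * (1 - alpha)."""
    return tuple(f + b * (1.0 - fg_alpha) for f, b in zip(fg_rgb, bg_rgb))
```

Doing the plate and pass combine first, and the character merge last, keeps the character from being darkened by shadows that were only meant to fall on the plate.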

shuffle, roto, color correct, white balance, vectorBlur